“AI isn’t magic” - It’s Statistics on Steroids

Welcome to the first day of your deprogramming from AI hype. Today we’re going to kill the biggest lie in tech: that AI “thinks” like you do.

The 1.8-Trillion-Parameter Probability Machine

Let me start with a number that will blow your mind: 1.8 trillion.

That’s how many “parameters” (fancy word for adjustable knobs) GPT-4 reportedly has. Each one of these knobs gets tweaked during training to help the model predict what word comes next.

Not “understand” what word comes next.

Not “reason about” what word comes next.

Just predict it. Like a cosmic autocomplete on performance-enhancing drugs.

Here’s what those 1.8 trillion parameters actually do: they’re weights in a massive mathematical operation called matrix multiplication. Every output number is the dot product of a row and a column - the same math you learned (and forgot) in high school, just scaled up to an absolutely insane degree.
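If "dot product of rows and columns" sounds abstract, here is a minimal sketch in plain Python. The sizes and numbers are made up for illustration (six "knobs" instead of a trillion), and the helper names `dot` and `matmul` are mine, not anything from a real model.

```python
# Toy illustration: matrix multiplication built from dot products.
# The numbers are invented; real models use matrices with thousands
# of rows and columns.

def dot(row, col):
    """Multiply matching entries and add them up."""
    return sum(r * c for r, c in zip(row, col))

def matmul(a, b):
    """Each output cell is the dot product of a row of `a` and a column of `b`."""
    cols_of_b = list(zip(*b))  # transpose so we can walk the columns of b
    return [[dot(row, col) for col in cols_of_b] for row in a]

inputs = [[1.0, 2.0]]             # one input vector (1 x 2)
weights = [[0.5, -1.0, 0.0],      # six adjustable "knobs" (2 x 3)
           [0.25, 0.5, 1.0]]

result = matmul(inputs, weights)
print(result)  # [[1.0, 0.0, 2.0]]
```

Training is the process of nudging the numbers in `weights` until the outputs look right. Nothing else in the function changes.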

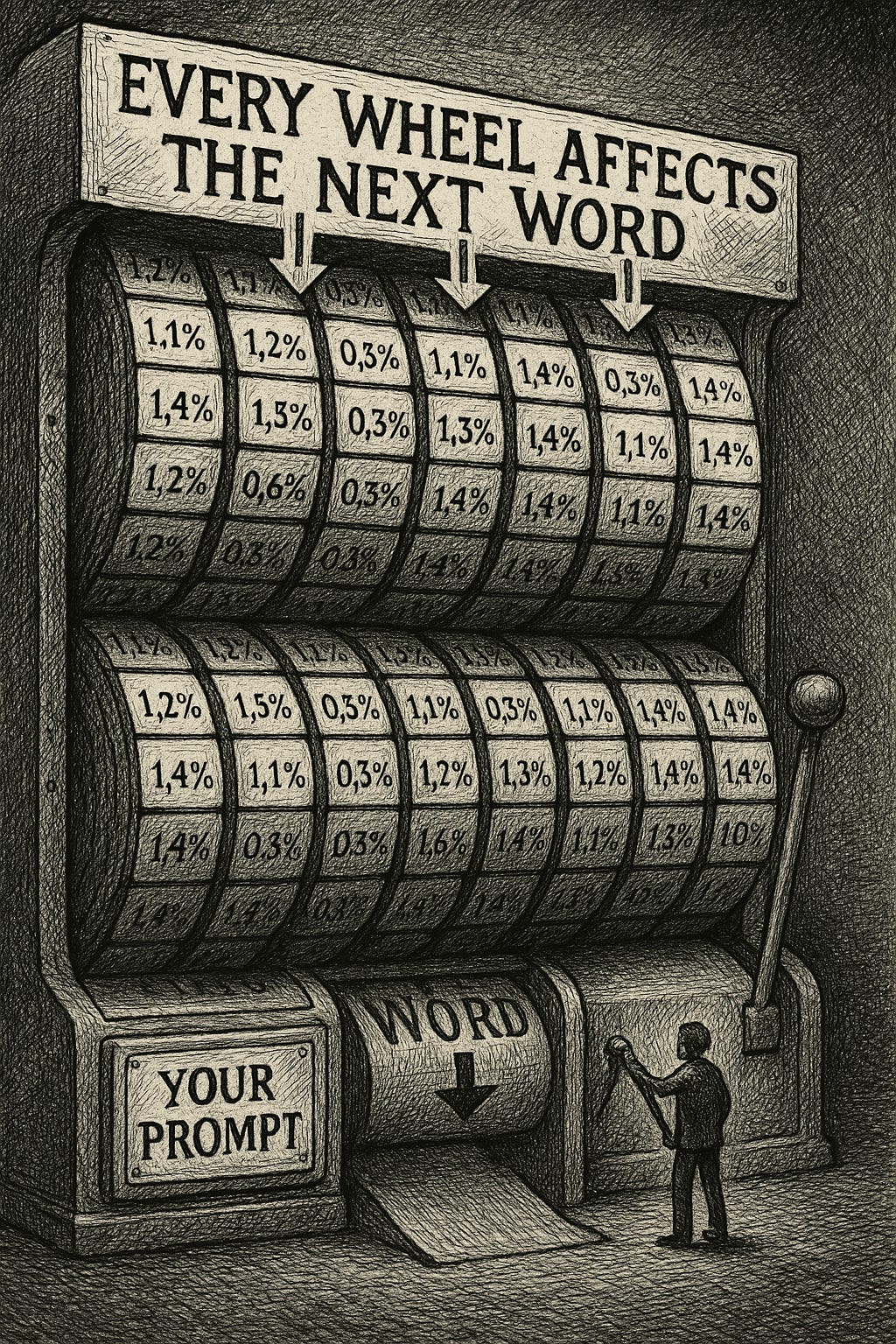

[Illustration 1: “The Parameter Casino”]

It’s Just Markov Chains on Steroids (Very Expensive Steroids)

Remember those old text generators that would create hilarious nonsense by looking at word patterns? Mark V. Shaney, a third-order Markov chain program from the 1980s, would look at sequences of three words and predict what comes next based on probability.
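To show how little machinery this takes, here is a minimal sketch of a word-level Markov chain generator. It is not Mark V. Shaney's actual code: the function names, the tiny corpus, and the choice of `order=2` (two words of context predicting the next one) are all assumptions for illustration.

```python
import random
from collections import defaultdict

def build_chain(text, order=2):
    """Map each run of `order` words to the list of words seen right after it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        prefix = tuple(words[i:i + order])
        chain[prefix].append(words[i + order])
    return chain

def generate(chain, start, length=10, seed=0):
    """Extend `start` by repeatedly sampling a follower of the last few words."""
    rng = random.Random(seed)
    out = list(start)
    order = len(start)
    for _ in range(length):
        followers = chain.get(tuple(out[-order:]))
        if not followers:  # dead end: this context never appeared mid-text
            break
        out.append(rng.choice(followers))
    return " ".join(out)

corpus = "the cat sat on the mat and the cat sat on the chair"
chain = build_chain(corpus, order=2)
print(chain[("on", "the")])                       # ['mat', 'chair']
print(generate(chain, ("the", "cat"), length=8))
```

Words that followed a context more often appear more often in its list, so `rng.choice` picks them with higher probability. That is the entire "model".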

ChatGPT is doing the exact same thing, just with more context and fancier math. Instead of looking at 3 words, it can look at thousands. Instead of simple probability tables, it uses those trillion parameters. But fundamentally? It’s still just calculating the probability that each word follows the previous words.

The only difference between ChatGPT and your phone’s autocomplete:

Your phone: “After ‘The cat sat on the...’ probably comes ‘mat’ (65% chance) or ‘chair’ (20% chance)”

ChatGPT: Does this for every possible word in its vocabulary, using matrix multiplication that combines and transforms input data as it passes through layers
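Where do percentages like those come from? Language models typically produce a raw score for every word in the vocabulary and then squash the scores into probabilities with a function called softmax. A minimal sketch, with a four-word vocabulary and scores I made up:

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    biggest = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - biggest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Invented scores for the word after "The cat sat on the..."
scores = {"mat": 2.0, "chair": 0.8, "roof": 0.0, "moon": -1.0}
probs = softmax(scores)

for word, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {p:.0%}")
```

A real model does the same thing over tens of thousands of vocabulary entries instead of four.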

[Illustration 2: “Evolution of BS”]

The Hilarious Truth About “Understanding”

Here’s my favorite way to explain this to people who think ChatGPT “understands” them:

ChatGPT has read more text than any human ever could - GPT-4 was reportedly trained on approximately 13 trillion tokens, including CommonCrawl, RefinedWeb, Twitter, Reddit, YouTube, and a large collection of textbooks.

But it understands that text the same way a photocopier understands the documents it copies.

When you ask ChatGPT a question, here’s what actually happens:

Your words get converted into numbers (tokens)

Those numbers get multiplied by matrices... a lot

Linear transformations project the input vector to the output vector

Out pops a probability for every possible next token, one gets picked, and the whole thing repeats until the response is finished

It’s not thinking. It’s doing math. REALLY fast math, but still just math.
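The steps above can be sketched end to end in a few lines. This is a deliberately tiny toy, not how ChatGPT is implemented: the five-word vocabulary and the `WEIGHTS` table are hand-picked rather than learned, and it only looks at the last token, where a real model looks at thousands.

```python
# Toy pipeline: text -> token IDs -> vectors -> matrix multiply -> next token.

VOCAB = ["the", "cat", "sat", "on", "mat"]
TOKEN_ID = {word: i for i, word in enumerate(VOCAB)}

# WEIGHTS[i][j] is the score for token j following token i (invented numbers).
WEIGHTS = [
    [0.1, 2.0, 0.0, 0.0, 1.5],  # after "the"
    [0.0, 0.0, 3.0, 0.2, 0.0],  # after "cat"
    [0.0, 0.0, 0.0, 2.5, 0.1],  # after "sat"
    [2.2, 0.0, 0.0, 0.0, 0.3],  # after "on"
    [0.5, 0.0, 0.0, 0.1, 0.0],  # after "mat"
]

def one_hot(token_id):
    """Step 1: represent a token as a vector of numbers."""
    return [1.0 if i == token_id else 0.0 for i in range(len(VOCAB))]

def next_token(token_id):
    """Steps 2-4: multiply by the weight matrix, then take the top score."""
    vec = one_hot(token_id)
    scores = [sum(v * w for v, w in zip(vec, col)) for col in zip(*WEIGHTS)]
    return scores.index(max(scores))

def complete(prompt, steps):
    """Generate one token at a time, feeding each output back in."""
    ids = [TOKEN_ID[w] for w in prompt.split()]
    for _ in range(steps):
        ids.append(next_token(ids[-1]))
    return " ".join(VOCAB[i] for i in ids)

print(complete("the", 4))  # the cat sat on the
```

Always taking the top score is the simplest way to pick; real systems usually sample from the probabilities instead, which is why you can get different answers to the same question.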

[Illustration 3: “The Understanding Illusion”]

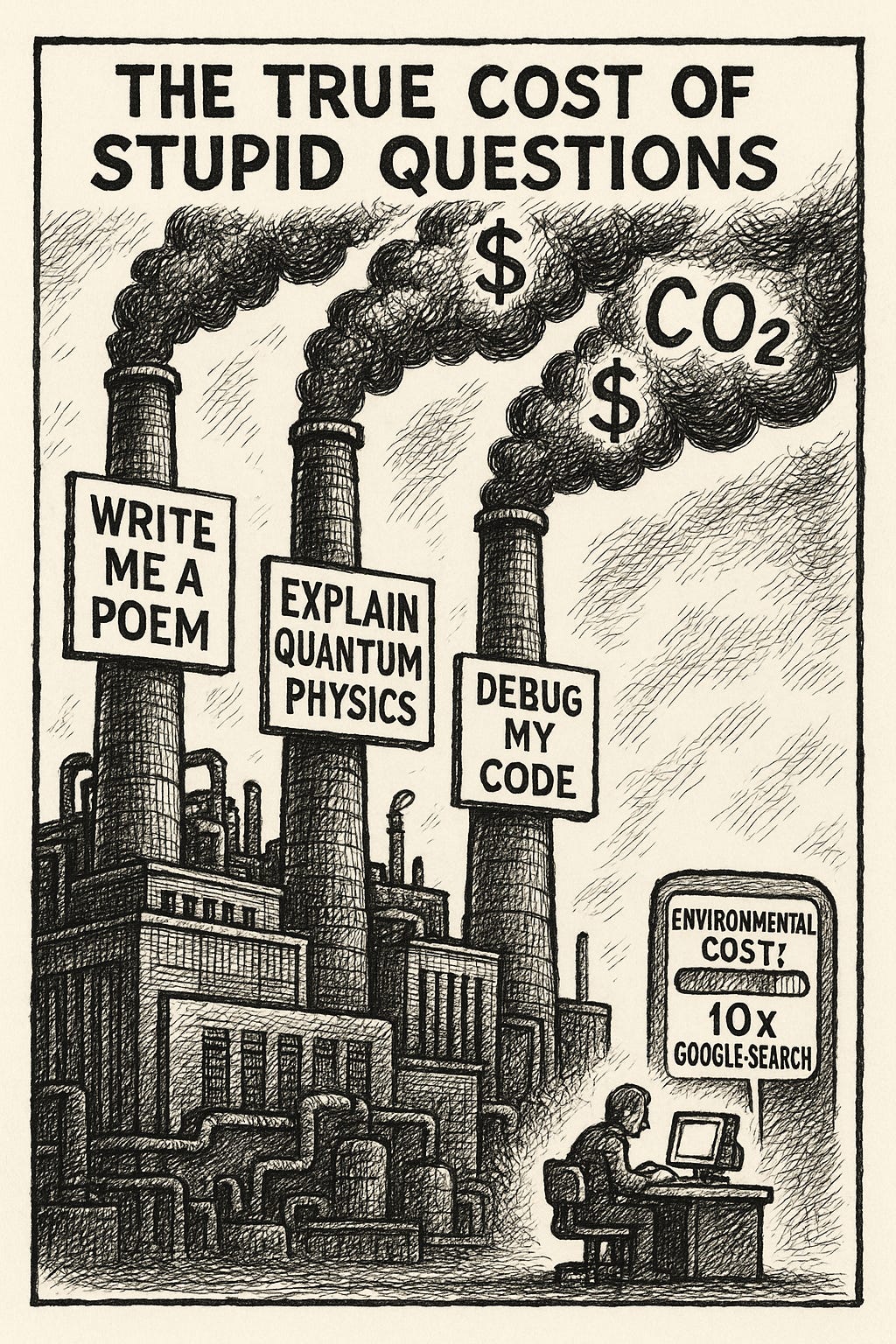

The Energy Cost of Fake Intelligence

Now here’s where it gets really stupid. All this statistical masturbation isn’t free.

Every time you ask ChatGPT a question:

It emits approximately 2-3 grams of CO2

By one widely cited estimate, that’s nearly 10 times as much electricity as a Google search

Other estimates put each query at about 0.3 to 0.4 watt-hours of electricity

Why? Because just holding 1.8 trillion parameters in memory at 16 bits each takes about 3.6 terabytes, before the model has done any work at all. That’s not running on your laptop. That’s running on clusters of specialized GPUs that cost more than your house.
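You can sanity-check the scale with back-of-the-envelope arithmetic. The sketch below counts the weights only, so treat it as a floor: caches and working memory at serving time, and optimizer state during training, push the real total well above it. The 16-bit precision and the 80 GB accelerator are my assumptions, and the parameter count is the reported figure from earlier.

```python
import math

PARAMS = 1_800_000_000_000   # reported GPT-4 parameter count (unconfirmed)
BYTES_PER_PARAM = 2          # assumption: 16-bit weights
GPU_MEMORY_BYTES = 80 * 10**9  # assumption: a hypothetical 80 GB accelerator

weights_bytes = PARAMS * BYTES_PER_PARAM
weights_tb = weights_bytes / 10**12
gpus_needed = math.ceil(weights_bytes / GPU_MEMORY_BYTES)

print(f"Weights alone: {weights_tb:.1f} TB")          # Weights alone: 3.6 TB
print(f"GPUs just to hold them: {gpus_needed}")       # GPUs just to hold them: 45
```

That is one copy of the model, serving nobody yet. Multiply by however many copies it takes to answer millions of people at once.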

Meanwhile, your brain - which actually DOES understand things - runs on about 20 watts. The same as a dim light bulb.

[Illustration 4: “The True Cost of Stupid Questions”]

Why This Matters (The Part Where I Save You Money)

Understanding that AI is just statistics isn’t academic. It’s practical.

When you know it’s just predicting probable next words, you understand why:

It makes up “facts” with complete confidence

It can’t actually reason about math (it’s pattern-matching against problems it’s seen)

It gets worse at tasks that don’t appear often in training data

It can “hallucinate” or misinterpret facts, especially about recent events

This is why every AI company is burning cash at an astronomical rate. GPT-4 cost an estimated $78 million worth of compute just to train. And they have to keep retraining because, remember, it doesn’t “learn” from your conversations - it’s frozen in time, just running statistics.

[Illustration 5: “The Money Furnace”]

Your BS Detector Calibration Exercise

Try this today:

Ask ChatGPT: “How many R’s are in the word ‘strawberry’?”

Watch how it handles this kindergarten-level task (many versions have confidently answered “two”)

Ask yourself: “If it understood language, how could it not count letters?”

The answer: Because it doesn’t see letters. It sees tokens (chunks of text), and “strawberry” arrives as one or a few chunks, not as ten letters. It’s never actually “looked” at the word letter by letter. It’s just seen those chunks millions of times and knows what typically comes before and after them.
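Here is a minimal sketch of what "seeing tokens" means. The three-chunk vocabulary and its ID numbers are made up, and the greedy longest-match splitting is a simplification of how real tokenizers work; the exact split of "strawberry" depends on the tokenizer.

```python
# Hypothetical vocabulary: real tokenizers learn tens of thousands of chunks.
VOCAB = {"str": 101, "aw": 102, "berry": 103}

def tokenize(text, vocab):
    """Greedy longest-match split of `text` into known chunks, returned as IDs."""
    ids = []
    i = 0
    while i < len(text):
        for end in range(len(text), i, -1):  # try the longest piece first
            piece = text[i:end]
            if piece in vocab:
                ids.append(vocab[piece])
                i = end
                break
        else:
            raise ValueError(f"no token covers {text[i]!r}")
    return ids

word = "strawberry"
print(tokenize(word, VOCAB))  # what the model receives: [101, 102, 103]
print(word.count("r"))        # what you see when you look at letters: 3
```

The model gets three ID numbers. The letter "r" does not appear anywhere in its input, so "counting the R's" means recalling what text usually follows that question, not counting.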

That’s not intelligence. That’s a $78 million pattern-matching machine that can’t count to three.

Tomorrow’s Lesson: “ChatGPT Doesn’t Think”

We’ll dive into why Large Language Models are just “autocomplete on steroids” and why that time Grandma told you ChatGPT “understood her feelings” was actually just statistical probability making her feel less alone.

Spoiler: The same math that generates Shakespeare quotes also generates absolute nonsense. The only difference is which training data it’s pulling from.

Remember: Every time someone says “AI understands,” a statistician loses their wings.