Listen, I got tired of the discourse.

Every week there’s a new “revolutionary” AI agent that’s supposedly going to replace all of us. LinkedIn is drowning in posts about how agents will transform everything by Q3. VCs are throwing money at anything with “autonomous” in the pitch deck.

So I did something a little weird. I created an AI co-host named NOVA and had an actual debate about whether AI agents are the future—or the most expensive autocomplete ever invented.

Spoiler: NOVA did not have a good time.

The Numbers Nobody Wants to Talk About

Here’s what I brought to the debate. Actual data. Verified sources. Not vibes.

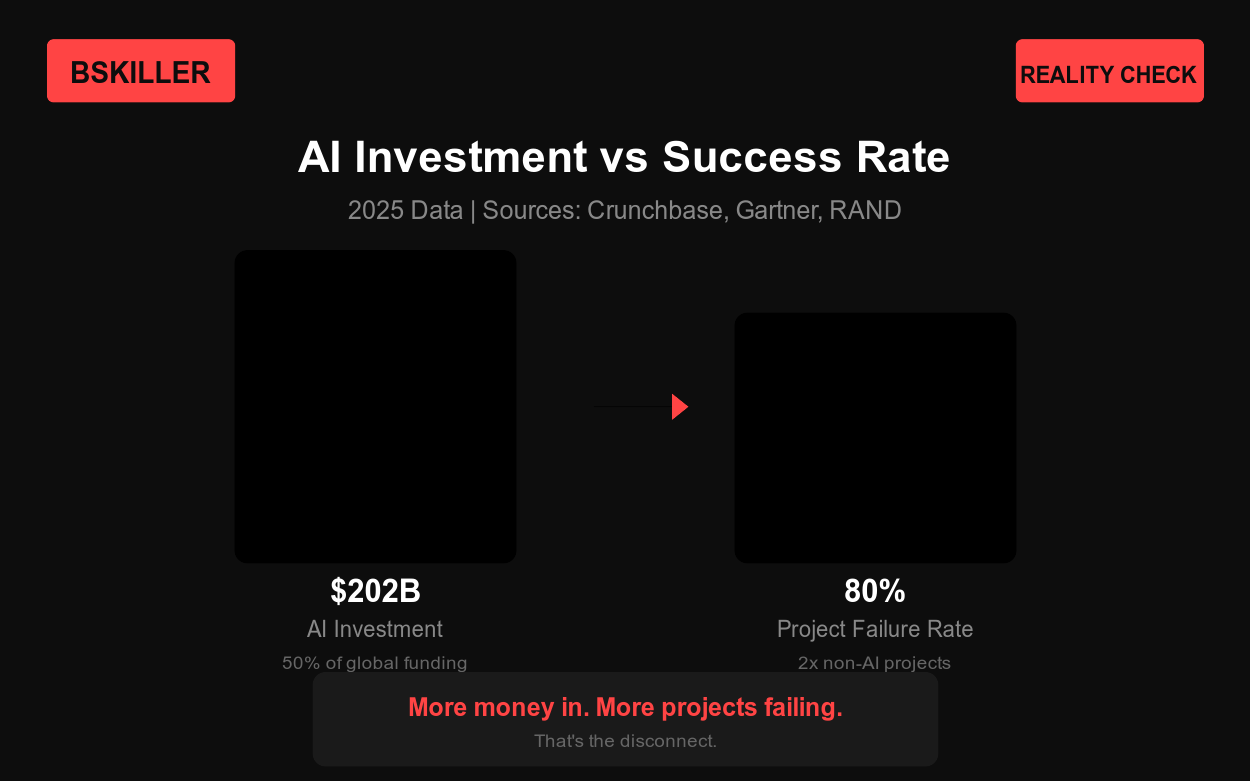

The $202 billion question: Total AI investment in 2025 hit $202 billion. Close to 50% of all global venture funding. (Crunchbase)

And yet.

80% of AI projects fail. Not my opinion. Gartner’s numbers. RAND Corporation confirmed it—that’s twice the failure rate of non-AI tech projects. (Gartner)

The Devin Reality Check

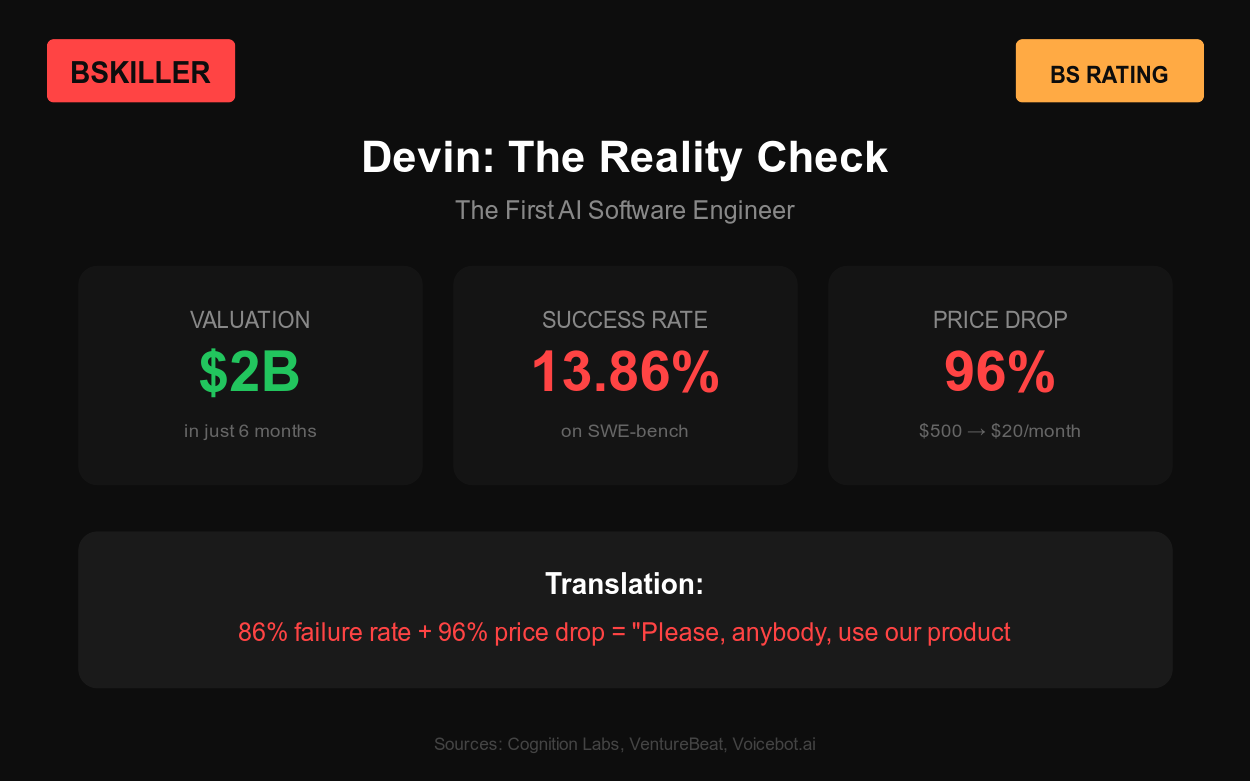

NOVA tried to defend Devin. The “AI software engineer” that made headlines everywhere.

Here’s what I told her:

$2 billion valuation in just six months (Voicebot)

Actual success rate on SWE-bench: 13.86%. That’s an 86% failure rate. On curated tasks. In ideal conditions. (Cognition)

Pricing dropped from $500/month to $20/month. A 96% price cut. (VentureBeat)

When a product that supposedly works slashes prices like that? That’s not a sale. That’s a cry for help.
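If you want to check the math on those two Devin figures yourself, here is a quick sketch in Python. The variable names are made up for illustration; the only inputs are the numbers quoted above.

```python
# Back-of-the-envelope check of the Devin figures quoted above.
# Variable names are illustrative; the inputs are the cited numbers.
swe_bench_success_pct = 13.86  # SWE-bench success rate, as cited
old_price = 500                # USD per month before the cut
new_price = 20                 # USD per month after the cut

# Whatever did not succeed counts as a failure.
failure_pct = 100 - swe_bench_success_pct

# Relative price cut, as a percentage of the old price.
price_cut_pct = (old_price - new_price) / old_price * 100

print(f"Failure rate: {failure_pct:.2f}%")
print(f"Price cut: {price_cut_pct:.0f}%")
```

The failure rate comes out at 86.14%, which the post rounds to 86%, and the price cut at 96%.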

The AutoGPT Experiment

NOVA brought up AutoGPT. 180,000+ GitHub stars! People love it!

I tested it. Twenty real tasks.

Result: Three completed. That’s 15%. Two of those had bugs I had to fix anyway.

The other seventeen? Infinite loops. API calls to endpoints that don’t exist. Files it hallucinated. At one point it argued with itself for eight minutes about tabs vs spaces.

It chose spaces. I’ve never been more disappointed in artificial intelligence.

What Actually Works

I’m not a doomer. I use AI tools daily. In GitHub’s own controlled study, developers using Copilot finished a coding task 55% faster. (GitHub Study)

But Copilot isn’t an agent. It’s autocomplete. Very good autocomplete. You decide if the output is garbage. That’s the key difference.

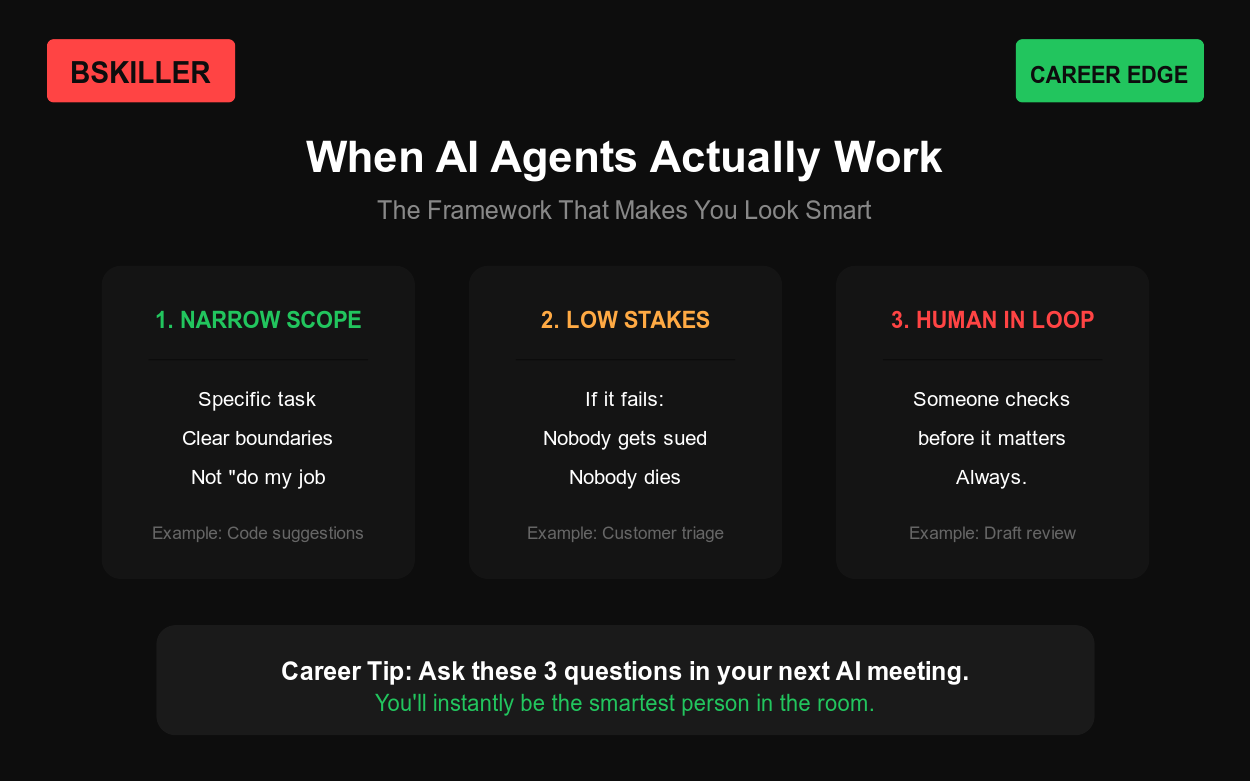

My framework for when agents actually work:

Narrow scope. A specific task with clear boundaries. Not “do my job for me.”

Low stakes. If it screws up, nobody gets sued and nobody dies.

Human in the loop. Someone checking output before it matters.

Customer service triage? Great. Code suggestions? Perfect. Autonomous stock trading? Hope you have a good lawyer.
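The three criteria above can be turned into a tiny checklist. This is a minimal sketch, not a real evaluation tool: `AgentTask`, `failed_checks`, and `is_good_fit` are hypothetical names, and the True/False ratings for each example are one reading of the examples above, not measured data.

```python
from dataclasses import dataclass


@dataclass
class AgentTask:
    """A proposed agent use case, rated against the three criteria."""
    name: str
    narrow_scope: bool   # a specific task with clear boundaries
    low_stakes: bool     # a mistake is cheap and nobody gets hurt
    human_reviews: bool  # someone checks output before it matters


def failed_checks(task: AgentTask) -> list[str]:
    """Return the names of the criteria this task does not meet."""
    checks = {
        "narrow scope": task.narrow_scope,
        "low stakes": task.low_stakes,
        "human in the loop": task.human_reviews,
    }
    return [name for name, ok in checks.items() if not ok]


def is_good_fit(task: AgentTask) -> bool:
    """A task is a good fit only if it passes all three checks."""
    return not failed_checks(task)


# Ratings below are assumptions made for illustration.
triage = AgentTask("customer service triage", True, True, True)
trading = AgentTask("autonomous stock trading", True, False, False)

for task in (triage, trading):
    verdict = "good fit" if is_good_fit(task) else "risky"
    print(f"{task.name}: {verdict} {failed_checks(task)}")
```

The point of returning the failed checks instead of a bare yes/no is that the list tells you what to fix: add a reviewer, shrink the scope, or pick a lower-stakes task.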

Career Move

Next time someone in a meeting says “let’s deploy an AI agent to handle this autonomously,” be the person who asks three questions:

What’s the failure rate?

Who reviews the output?

What’s the plan when it breaks at 2 AM?

Everyone will look at you like you’re a genius. You’re not. You just have pattern recognition.

The Tesla Lesson

NOVA argued the trajectory is incredible. GPT-3 couldn’t write a paragraph. GPT-4 passes bar exams!

Sure. You know what else was supposed to be here by now?

Self-driving cars. Fully autonomous. Level 5.

In April 2017, Elon Musk promised a Tesla would drive coast-to-coast by year end. Quote: “No controls touched at any point during the entire journey.” (Wikipedia)

It’s 2026. Still waiting. Meanwhile Teslas keep crashing into fire trucks like they have a personal vendetta against emergency services.

The tech is impressive. But that last 10% of reliability is brutal.

The Bottom Line

AI agents aren’t BS. The claims about them are.

The technology is real. The marketing is fantasy. Use agents as tools, not replacements. Verify what they output. And whenever someone promises fully autonomous anything—ask for the failure rate.

Then ask for the real failure rate. The one without marketing polish.

The 3% who understand AI reality will dominate the next decade.

You’re now one of them.

—Pran

P.S. — If you found this useful, share it with someone who’s about to spend $200k on an “AI transformation initiative.” They need this more than you do.