Here’s a number that should terrify anyone buying AI software:

More than 80% of AI projects fail.

That’s not my opinion. That’s RAND Corporation research. Twice the failure rate of regular IT projects.

It gets worse. S&P Global’s 2025 data shows 42% of companies abandoned most of their AI initiatives this year. Up from 17% last year. The average organization scrapped 46% of AI proof-of-concepts before they reached production.

And MIT’s 2025 report? Only 5% of generative AI pilots achieve meaningful business impact. The other 95% stall or die.

This isn’t a technology problem. It’s a bullshit detection problem.

Most AI vendors are selling demos, not products. The companies buying them don’t know how to tell the difference.

I do. And last month, it saved a client $100K.

The Setup

A client called me in a panic. They were about to sign a $100K/year contract with an AI vendor. “Document processing powered by AI.” Invoices go in, structured data comes out. No more manual data entry.

The demo was slick. The sales team was confident. “99.2% accurate.”

“Can you sit in on one more call? Make sure we’re not missing anything?”

I asked three questions. The deal collapsed in ten minutes.

Question 1: “What’s your error rate, and how did you measure it?”

What they said: “Our AI is 99.2% accurate.”

What I asked next: “Measured against what dataset? How many documents? What document types?”

What happened: Long pause. “That’s from our internal benchmarks.”

“How many documents?”

“I’d have to check with engineering.”

“Were they real customer documents or synthetic test data?”

Silence.

Why this question works:

Informatica’s 2025 CDO survey found that 43% of AI failures trace back to data quality issues. Vendors benchmark on clean, curated datasets. Your data is messy. The gap between “benchmark accuracy” and “production accuracy” is where projects die.

What good answers sound like:

“We measured on 50,000 real customer documents across 12 different formats. Accuracy was 94.3% overall, dropping to 87% on handwritten annotations.”

“Here’s our methodology document. We update benchmarks quarterly with actual production data.”

What bad answers sound like:

“Very accurate” (no number = no measurement)

“99%+” (meaningless without methodology)

“Internal benchmarks” (translation: cherry-picked)
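You don’t have to take the vendor’s number on faith. If you can hand-key a few hundred of your own documents, you can compute the same kind of number yourself.

Here’s a minimal Python sketch. It assumes each labeled document is a dict with three keys: a `doc_type`, the human-keyed `truth` fields, and the vendor’s `predicted` fields. That record shape is made up for illustration; it is not any vendor’s export format. The sketch scores field-level exact matches and breaks the result down by document type, which is the breakdown a good answer gives you.

```python
from collections import defaultdict


def accuracy_by_type(records):
    """Return {doc_type: field-level accuracy}, plus an 'overall' entry."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for rec in records:
        truth, predicted = rec["truth"], rec["predicted"]
        for name, expected in truth.items():
            # A missing field counts as wrong, same as a wrong value.
            hit = predicted.get(name) == expected
            for key in (rec["doc_type"], "overall"):
                total[key] += 1
                correct[key] += hit
    return {key: correct[key] / total[key] for key in total}


# Hypothetical labeled sample: one clean typed invoice, one messy handwritten one.
sample = [
    {"doc_type": "typed",
     "truth": {"total": "120.00", "date": "2025-03-01"},
     "predicted": {"total": "120.00", "date": "2025-03-01"}},
    {"doc_type": "handwritten",
     "truth": {"total": "75.50", "date": "2025-03-02"},
     "predicted": {"total": "75.80"}},
]

for doc_type, acc in sorted(accuracy_by_type(sample).items()):
    print(f"{doc_type:12s} {acc:.1%}")
```

Exact string matching is a deliberately strict assumption. Normalize dates and amounts before comparing, or you’ll count formatting differences as errors.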

Question 2: “Can you show me it failing?”

What they said: “We don’t really have failure cases to show.”

What I asked next: “In all your deployments, you’ve never had a document it couldn’t process?”

What happened: “Our system handles pretty much everything. Maybe some handwritten notes...”

“Show me.”

“We’d need to set that up.”

Why this question works:

Every AI system has failure modes. Every single one. Gartner data shows 70-85% of GenAI deployments fail to meet ROI expectations. That isn’t because the technology doesn’t work. It’s because buyers never saw how it breaks before they bought it.

If a vendor can’t show you failures, they either:

Haven’t operated at real scale

Know exactly how it breaks and are hiding it

Both are disqualifying.

What good answers sound like:

“Here are three failure cases from last month. This invoice had overlapping text; we got 60% of fields. This one had a watermark that confused the OCR. This one was a format we’d never seen.”

“We maintain a failure taxonomy. Currently tracking 23 distinct failure modes with mitigation strategies for each.”

What bad answers sound like:

“We don’t really see failures” (lying or hasn’t scaled)

“Let me show you another success” (deflection)

“That’s an edge case” (your production is nothing but edge cases)
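A failure taxonomy sounds fancy, but at pilot scale it can be a spreadsheet.

Here’s a minimal sketch. It assumes a reviewer tags every document the system got wrong with a failure-mode label. The document IDs, labels and recovery rates below are made up for illustration. The sketch counts failures per mode and pulls the worst cases, which is exactly what you want the vendor walking you through.

```python
from collections import Counter

# Hypothetical pilot log: one entry per failed document, tagged by a reviewer.
failures = [
    {"doc_id": "inv-0012", "mode": "overlapping_text", "fields_recovered": 0.6},
    {"doc_id": "inv-0047", "mode": "watermark", "fields_recovered": 0.3},
    {"doc_id": "inv-0103", "mode": "unseen_format", "fields_recovered": 0.0},
    {"doc_id": "inv-0121", "mode": "watermark", "fields_recovered": 0.5},
]


def failure_taxonomy(failures):
    """Count failures per mode, most common first (ties keep first-seen order)."""
    return Counter(f["mode"] for f in failures).most_common()


def worst_cases(failures, n=3):
    """Return the n documents with the lowest share of fields recovered."""
    return sorted(failures, key=lambda f: f["fields_recovered"])[:n]


for mode, count in failure_taxonomy(failures):
    print(f"{mode:18s} {count}")
for f in worst_cases(failures):
    print(f["doc_id"], f["fields_recovered"])
```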

Question 3: “Who’s been running this in production for more than 6 months, and can I talk to them?”

What they said: “We have several enterprise pilots underway.”

What I asked: “Can I speak with someone running it on real data?”

What happened: “Due to confidentiality agreements, we can’t share customer names.”

“You don’t have a single reference I can call?”

More silence.

Why this question works:

“Works in demo” and “works in production” are completely different things. S&P Global found that 46% of AI POCs get scrapped before production. The graveyard is full of impressive demos.

If no one’s running it in production after 6+ months, there’s a reason:

It doesn’t scale

Hidden costs killed the ROI

The accuracy claims didn’t hold up

You’re the guinea pig

What good answers sound like:

“Company X has been running this for 18 months, processing 50K documents/day. Here’s their CTO’s email; she’s happy to chat.”

“We have 12 production customers. Three are in your industry. I can set up reference calls with any of them.”

What bad answers sound like:

“Several pilots” (pilots aren’t production)

“NDA prevents sharing” (convenient excuse)

“We’re early stage” (you’re the test subject)

The Aftermath

I told the client: “Before you sign, let’s run a 30-day pilot with YOUR documents.”

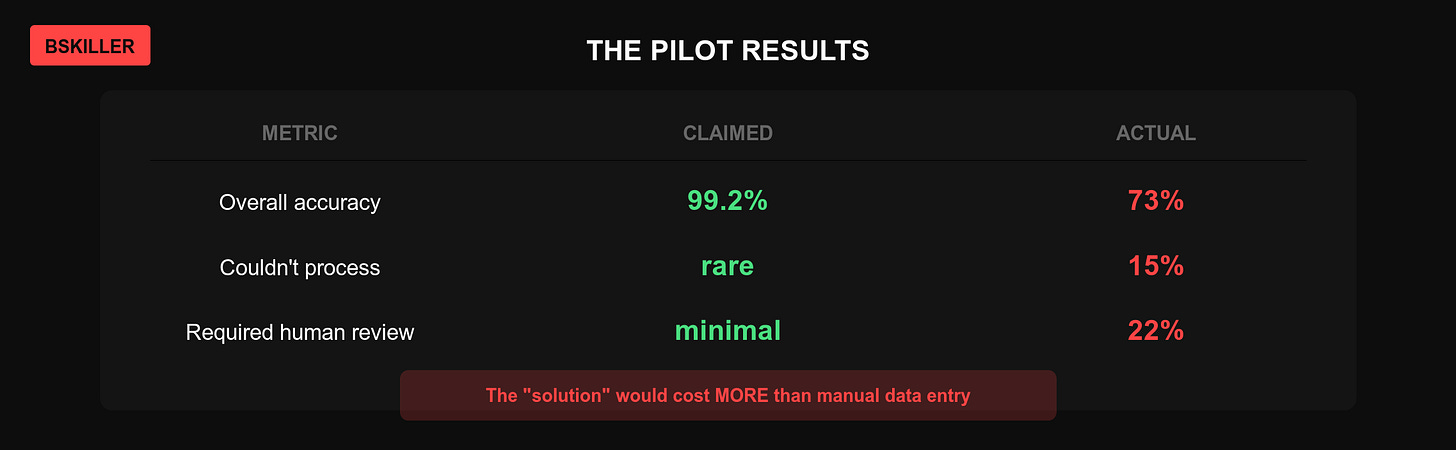

The vendor agreed. We sent 500 real invoices from the client’s actual workflow.

The results:

The “$100K AI solution” would have cost more in human cleanup than doing data entry manually.

The client walked away. That’s $100K in direct savings, plus the hidden costs of a failed implementation, which RAND estimates run 2-3x the initial contract value when you factor in integration, training, and organizational disruption.
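How can AI plus cleanup cost more than plain data entry? Every document still gets a human look, and fixing a wrong field can take longer than typing it fresh.

The client’s actual pilot figures aren’t in this post, so every input below is a hypothetical placeholder. This minimal sketch compares the annual cost of manual entry against the license plus human review and cleanup time.

```python
def annual_costs(invoices_per_year, manual_minutes, hourly_rate, license_per_year,
                 review_minutes, error_rate, cleanup_minutes):
    """Compare manual entry against an AI license plus human review and cleanup."""
    per_minute = hourly_rate / 60
    manual = invoices_per_year * manual_minutes * per_minute
    # Every invoice gets a quick review; the error_rate share also needs fixing.
    human_minutes = review_minutes + error_rate * cleanup_minutes
    ai_human_time = invoices_per_year * human_minutes * per_minute
    return {
        "manual": manual,
        "ai_human_time": ai_human_time,
        "ai_total": license_per_year + ai_human_time,
    }


# Hypothetical inputs, not the client's pilot numbers.
costs = annual_costs(
    invoices_per_year=40_000,
    manual_minutes=4,
    hourly_rate=30,
    license_per_year=100_000,
    review_minutes=1,
    error_rate=0.4,      # share of invoices with at least one wrong field
    cleanup_minutes=10,  # assumed: finding a wrong field takes longer than typing it
)
for name, value in costs.items():
    print(f"{name:14s} ${value:,.0f}")
```

With those made-up inputs, human time alone ($100,000) exceeds manual entry ($80,000) before you pay a cent of license.

The number to watch is review minutes plus error rate times cleanup minutes. If that meets or beats the manual minutes per document, no license price saves the deal.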

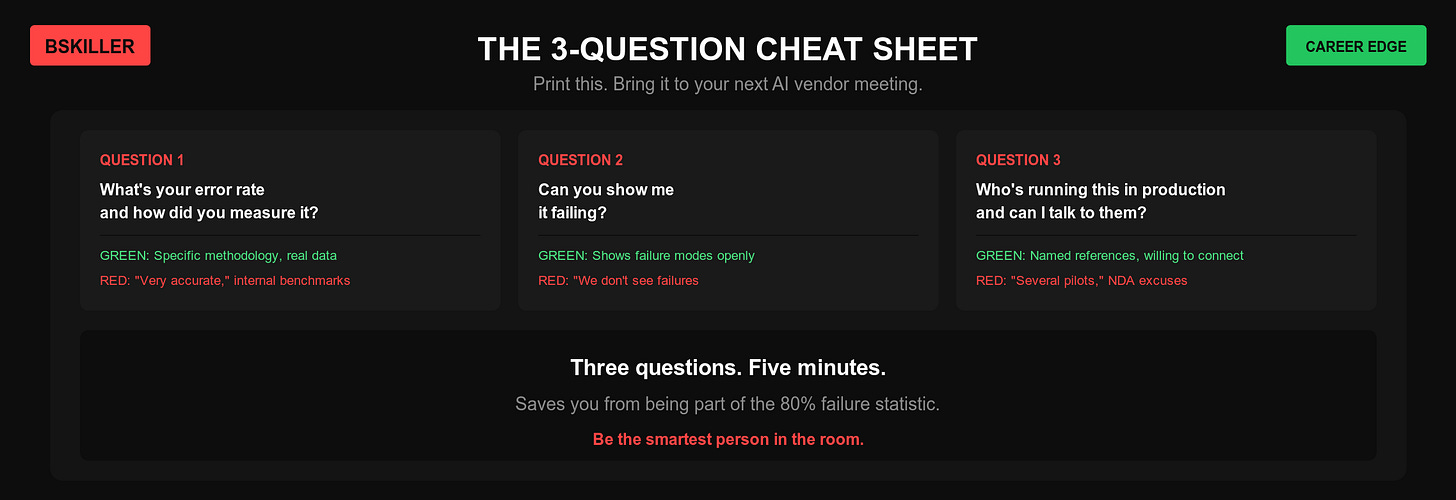

The Cheat Sheet

Print this. Bring it to your next AI vendor meeting.

Three questions. Five minutes. Saves you from being part of the 80% failure statistic.

5 More Questions For Your Arsenal

The three questions above catch the obvious fakes. These five catch the subtle ones.

4. “What happens when your system is wrong and we don’t catch it?”

You’re testing: Do they have error recovery? Audit trails? Rollback procedures?

Bad answers: “That rarely happens” or “Users catch errors in review.”

Good answers: “Every output includes a confidence score. Below 85%, it’s flagged for human review. We maintain full audit logs with 90-day retention. Here’s our incident response playbook.”
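The good answer describes a simple pattern: route low-confidence outputs to a person and log every decision.

Here’s a minimal sketch. It assumes the vendor returns a 0-to-1 confidence score per extracted field; check what their API actually exposes. The 0.85 cutoff mirrors the example answer. `Extraction` and `Router` are hypothetical names, and the in-memory list stands in for a real audit store.

```python
import json
import time
from dataclasses import dataclass, field

REVIEW_THRESHOLD = 0.85  # mirrors the "below 85%" rule in the answer above


@dataclass
class Extraction:
    doc_id: str
    field_name: str
    value: str
    confidence: float  # assumed: a 0-1 score the vendor reports per field


@dataclass
class Router:
    # In-memory stand-in for an append-only audit store.
    audit_log: list[str] = field(default_factory=list)

    def route(self, ex: Extraction) -> str:
        decision = "auto_accept" if ex.confidence >= REVIEW_THRESHOLD else "human_review"
        # Log every decision, accepted or not, so a wrong value can be traced later.
        self.audit_log.append(json.dumps({
            "ts": time.time(),
            "doc_id": ex.doc_id,
            "field": ex.field_name,
            "value": ex.value,
            "confidence": ex.confidence,
            "decision": decision,
        }))
        return decision


router = Router()
print(router.route(Extraction("inv-001", "total", "1204.50", 0.97)))
print(router.route(Extraction("inv-001", "due_date", "2025-13-01", 0.62)))
```

The threshold only means something if the scores are calibrated. Check on your pilot data that fields scored above 0.85 really are right at least that often.

Also spot-check a sample of auto-accepted fields. Those are the errors nobody catches.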

5. “What’s your total cost of ownership, not just the license fee?”

You’re testing: Are they hiding infrastructure, integration, and maintenance costs?

Bad answers: “It’s just $X per month” or “Integration is straightforward.”

Good answers: “License is $X. Typical integration costs run $Y-Z depending on your stack. Ongoing compute costs average $W per 1000 documents. Here’s a TCO calculator based on your volume.”
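If the vendor won’t hand you a TCO calculator, build a rough one yourself. This is a minimal sketch. The three-year horizon, the cost categories and every number in the example are assumptions for illustration, not quotes from any vendor.

```python
def tco(license_per_year, integration_one_time, compute_per_1000_docs,
        docs_per_month, maintenance_per_year=0.0, years=3):
    """Rough total cost of ownership over `years`, plus the license's share of it."""
    compute_per_year = docs_per_month * 12 / 1000 * compute_per_1000_docs
    license_total = license_per_year * years
    total = (integration_one_time
             + license_total
             + (compute_per_year + maintenance_per_year) * years)
    return {"total": total, "license_share": license_total / total}


# Every number here is a made-up placeholder, not a real quote.
result = tco(
    license_per_year=100_000,
    integration_one_time=40_000,
    compute_per_1000_docs=15,
    docs_per_month=50_000,
    maintenance_per_year=20_000,
)
print(f"3-year TCO: ${result['total']:,.0f}; license is {result['license_share']:.0%} of it")
```

In this made-up example, the license is only about 70% of three-year spend. The rest is what “integration is straightforward” tends to leave out.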

6. “What happens to my data, and where does it go?”

You’re testing: Data residency, training policies, and security posture.

Bad answers: “Your data is secure” or “We follow best practices.”

Good answers: “Data stays in your chosen region. We never train on customer data. Here’s our SOC 2 Type II report. Here’s our data processing agreement with specific retention policies.”

7. “What’s your roadmap for the next 12 months?”

You’re testing: Is this a real product or a feature searching for a use case?

Bad answers: “We’re focused on AI capabilities” or “Lots of exciting things coming.”

Good answers: “Q1: Multi-language support. Q2: API v2 with batch processing. Q3: On-prem deployment option. Here are the three features our top customers requested, and when they’re shipping.”

8. “What happens if you get acquired or shut down?”

You’re testing: Business viability and exit strategy for your data.

Bad answers: “We’re well-funded” or “That won’t happen.”

Good answers: “We have 24 months of runway. If we’re acquired, contracts transfer. If we shut down, you get 90 days’ notice and full data export. Here’s the relevant clause in our contract.”

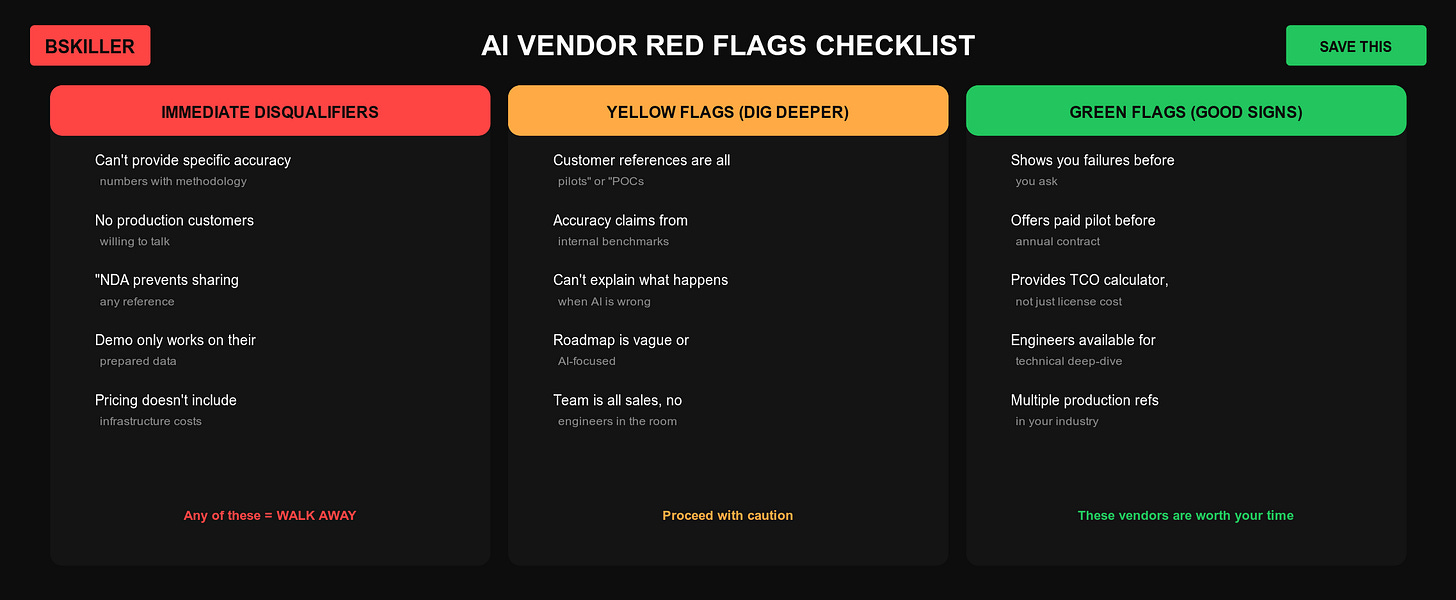

Red Flags Checklist

Save this. Screenshot it. Use it in every AI vendor evaluation.

The text version (for copy-paste):

Immediate disqualifiers:

Can’t provide specific accuracy numbers with methodology

No production customers willing to talk

“NDA prevents sharing” as the answer to every reference request

Demo only works on their prepared data

Pricing doesn’t include infrastructure costs

Yellow flags (dig deeper):

Customer references are all “pilots” or “POCs”

Accuracy claims from “internal benchmarks”

Can’t explain what happens when the AI is wrong

Roadmap is vague or “AI-focused”

Team is all sales, no engineers in the room

Green flags (good signs):

Shows you failures before you ask

Offers paid pilot before annual contract

Provides TCO calculator, not just license cost

Engineers available for technical deep-dive

Multiple production references in your industry
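If you’re evaluating several vendors, it helps to make the checklist mechanical so every vendor gets scored the same way.

Here’s a minimal sketch. The flag names are hypothetical shorthand for the items above. The decision rule is one reasonable reading of the checklist, not a formula from it:

- any disqualifier means walk away;
- any yellow flag means dig deeper;
- otherwise, move to a pilot on your own data.

```python
from enum import Enum

# Hypothetical shorthand keys for the checklist items above.
DISQUALIFIERS = {
    "no_accuracy_methodology",
    "no_production_references",
    "nda_blocks_all_references",
    "demo_only_on_vendor_data",
    "pricing_excludes_infrastructure",
}
YELLOW_FLAGS = {
    "references_are_pilots",
    "internal_benchmarks_only",
    "no_error_handling_story",
    "vague_roadmap",
    "no_engineers_in_room",
}
GREEN_FLAGS = {
    "shows_failures_unprompted",
    "offers_paid_pilot",
    "provides_tco_calculator",
    "engineers_available",
    "industry_production_references",
}


class Verdict(Enum):
    WALK_AWAY = "walk away"
    DIG_DEEPER = "dig deeper"
    RUN_PILOT = "run a pilot on your own data"


def evaluate(observed: set[str]) -> tuple[Verdict, list[str]]:
    """Return (verdict, green flags seen) for the flags observed in a meeting."""
    unknown = observed - DISQUALIFIERS - YELLOW_FLAGS - GREEN_FLAGS
    if unknown:
        # Catch typos so a misspelled red flag can't silently pass.
        raise ValueError(f"unknown flags: {sorted(unknown)}")
    greens = sorted(observed & GREEN_FLAGS)
    if observed & DISQUALIFIERS:
        return Verdict.WALK_AWAY, greens
    if observed & YELLOW_FLAGS:
        return Verdict.DIG_DEEPER, greens
    return Verdict.RUN_PILOT, greens


verdict, greens = evaluate({"internal_benchmarks_only", "offers_paid_pilot"})
print(verdict.value, greens)
```

Green flags are reported but never override a disqualifier. A vendor who offers a paid pilot but won’t name a single production customer is still a walk-away.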

The Career Play

Here’s what nobody tells you about asking these questions:

When you’re the person who asks about error rates in a room full of nodding heads, you look like the only adult present.

When you request failure demos, you signal you’ve actually deployed AI before.

When you push for reference customers, you demonstrate the due diligence that executives respect.

McKinsey’s 2025 AI survey found that organizations with “significant” AI returns are twice as likely to have rigorous evaluation processes before deployment.

The person who asks hard questions gets invited to important meetings. The person who nods along gets blamed when the project joins the 80% failure pile.

What This Means For You

Whether you’re technical or not, these questions work. You don’t need to understand how AI works. You need to understand how vendors dodge accountability.

If you’re:

Buying AI - These questions protect your budget and reputation

Selling AI - If you can’t answer these, fix your product before your pitch

Building AI - This is the bar your customers should hold you to

Managing AI projects - This is your pre-flight checklist

In meetings where AI comes up - This is how you become the trusted voice

The AI market is drowning in vapor. The people who can separate signal from noise will dominate the next decade. The people who nod along will wonder what happened to their careers.

One More Thing

I put together everything I know about detecting AI bullshit. Not theory. Patterns from actually building and buying AI systems.

What’s in BSKiller each week:

Vendor teardowns - I analyze real AI products. What’s solid, what’s smoke.

Production patterns - Code and architectures that actually work at scale

Cost reality - What AI really costs (vendors hide 40-60% of total spend)

Career positioning - How to be the AI-literate person every team needs

700+ ambitious professionals already get it. Whether they’re technical or not, they refuse to fall behind while AI reshapes their industry.

The 3% who understand AI reality will dominate the next decade.

