Why Nobody Explained Loss Functions Like This Before: 7 Simple Doodles That Finally Make Sense

The mathematical heart of AI, stripped of unnecessary complexity and jargon.

Every AI expert I've ever met has the same strange blind spot.

They can build neural networks that recognize faces, generate essays, or drive cars – but ask them to explain how these systems learn, and suddenly they're speaking an alien language:

"The stochastic gradient descent optimizer minimizes the cross-entropy loss function by computing partial derivatives with respect to the model parameters..."

Stop. Please. We're begging you.

After teaching thousands of engineers and watching their eyes glaze over during the "loss function" lecture, I finally decided to solve this problem with something radical: simple pictures that normal humans can understand.

The 7 Doodles That Will Finally Make Loss Functions Click

Here's the zero-fluff explanation of loss functions that nobody bothered to give you. No equations. No Greek symbols. Just visual intuition that would have saved you weeks of confusion.

1. Reality vs. Prediction: The Basic Problem

"Loss = how far each guess misses the mark."

Imagine a dartboard labeled "Truth." Your AI model is throwing darts labeled "Guesses."

The distance between each dart and the bullseye? That's your loss.

When your model predicts a cat is 80% likely to be in an image, but the truth is 100% (there definitely is a cat), that difference is your error – your miss.

This is the whole game of machine learning: get those darts to land closer to the truth.

2. Per-Sample Error: Making It Measurable

"Single miss ⇒ single error number."

For every prediction your model makes, you need to measure how bad the miss was.

The tape measure stretching from your dart to the bullseye gives you a single number. This is crucial: you're converting a wrong prediction into a measurement.

For a house price prediction that's $50,000 too high, that distance is 50,000. For a spam filter that predicts a 70% chance of spam when the email is definitely spam, that distance is 0.3.
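If you'd like to see that conversion as code, here is a minimal Python sketch. The helper name `per_sample_error` and the example numbers are illustrative assumptions: a $350,000 guess for a house that sold for $300,000, and a 0.7 spam score for an email that is definitely spam (1.0). It measures the miss as a plain absolute difference.

```python
def per_sample_error(prediction: float, truth: float) -> float:
    """Distance between one guess and the truth (absolute error)."""
    return abs(prediction - truth)


# House price: the model guesses $350,000 for a house that sold for $300,000.
house_error = per_sample_error(350_000, 300_000)

# Spam filter: the model says 0.7 (70% spam) for an email that is definitely spam (1.0).
spam_error = per_sample_error(0.7, 1.0)

print(house_error)           # 50000
print(round(spam_error, 2))  # 0.3
```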

This conversion of mistakes into numbers is the first magic trick of machine learning.

3. Aggregating Errors: From Many to One

"Turn a pile of misses into one score the optimizer can chase."

Your model makes thousands or millions of predictions. How do you summarize all those errors?

Imagine all those individual error measurements as tiny cubes falling into a bucket marked "Σ" (the sum symbol). Once the model has made its predictions on a batch of data (or the whole dataset), the bucket holds every one of those errors.

Typically, we take the average – divide by the number of predictions – to get the mean error.
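The bucket-and-average step fits in a few lines of Python. This is a sketch with a hypothetical `mean_error` helper and made-up error values. It assumes the per-sample errors are already computed and that there is at least one of them.

```python
def mean_error(errors: list[float]) -> float:
    """Drop every per-sample error into one bucket, then average."""
    bucket = 0.0
    for e in errors:
        bucket += e              # the "Σ" bucket: running total of all misses
    return bucket / len(errors)  # divide by how many predictions we made (must be > 0)


errors = [2.0, 1.0, 3.0]  # hypothetical per-sample errors
print(mean_error(errors))  # 2.0
```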

This single number is what your entire AI optimization process will try to minimize.

4. MSE vs. MAE: Why We Square Errors

"Square the distance ⇒ punish big blunders harder, give steeper gradients."

Here's where it gets interesting. Consider two ways to measure error:

Use the raw distance, then average (Mean Absolute Error or MAE)

Square the distance, then average (Mean Squared Error or MSE)

Picture the two loss curves as valleys: MAE is a V shape with the same steepness everywhere. MSE is a bowl: very steep far from the bottom, nearly flat close to it.

Why does this matter? When your model makes a huge mistake (a dart that completely misses the board), squaring that large error creates an even larger loss. This tells your model: "Fix the big mistakes first!"
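To see the "fix the big mistakes first" effect in numbers, here is a small Python sketch comparing MAE and MSE on the same made-up set of misses: three small ones and one dart that flew off the board. The helper names `mae` and `mse` are just illustrative.

```python
def mae(errors: list[float]) -> float:
    """Mean Absolute Error: average of the raw distances."""
    return sum(abs(e) for e in errors) / len(errors)


def mse(errors: list[float]) -> float:
    """Mean Squared Error: square each distance, then average."""
    return sum(e * e for e in errors) / len(errors)


# Three small misses and one huge blunder (hypothetical numbers).
errors = [1.0, 1.0, 1.0, 10.0]

print(mae(errors))  # 3.25
print(mse(errors))  # 25.75
```

The one big miss accounts for 10 of the 13 units summed by MAE, but 100 of the 103 units summed by MSE, so under MSE it dominates the score.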

More importantly, with squared error the gradient grows in proportion to the size of the miss, so the strongest correction signals go exactly where your model is most wrong.
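The steeper-gradient claim can be checked by writing down the slope of each per-sample loss with respect to the miss `e`: the slope of |e| is always +1 or -1, while the slope of e² is 2e. A minimal sketch, with hypothetical helper names:

```python
def abs_error_slope(e: float) -> float:
    """Slope of |e|: always +1 or -1, no matter how big the miss."""
    # At exactly e = 0 the slope is undefined; we return 0 by convention.
    if e > 0:
        return 1.0
    if e < 0:
        return -1.0
    return 0.0


def squared_error_slope(e: float) -> float:
    """Slope of e**2 is 2 * e: it grows in proportion to the miss."""
    return 2.0 * e


for e in [0.1, 1.0, 10.0]:
    print(e, abs_error_slope(e), squared_error_slope(e))
# 0.1 1.0 0.2
# 1.0 1.0 2.0
# 10.0 1.0 20.0
```

Under squared error, a miss of 10 gets a push 100 times stronger than a miss of 0.1, while absolute error pushes on both equally.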

This is why MSE is the default loss for regression problems: it punishes big blunders far more harshly than small mistakes.

5. Gradient = Compass: Finding Your Way Down

"Derivative tells the model which way shrinks the loss fastest."

Now your model has a single number representing its total failure. How does it improve?

Imagine a hiker on a contour map of error mountain. The gradient (∇Loss) points in the direction of steepest ascent, so its negative is a compass that always points downhill in the steepest direction.

For each parameter in your model (weights and biases in a neural network), the gradient tells you: "If you adjust this knob a little, will the error go up or down, and by how much?"
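You can ask the "adjust this knob a little" question literally: nudge one parameter by a tiny amount and see how the loss changes. The sketch below assumes a hypothetical one-input model `prediction = w * x + b`, MSE loss, and made-up data where the true relationship is y = 2x. This finite-difference estimate is only for illustration; real frameworks compute gradients exactly with automatic differentiation (backpropagation) rather than by nudging.

```python
def loss(w: float, b: float, xs: list[float], ys: list[float]) -> float:
    """Mean squared error of a tiny model: prediction = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def nudge_gradient(w: float, b: float, xs: list[float], ys: list[float],
                   eps: float = 1e-6) -> tuple[float, float]:
    """Estimate the gradient by nudging each knob slightly and watching the loss."""
    base = loss(w, b, xs, ys)
    dw = (loss(w + eps, b, xs, ys) - base) / eps  # effect of turning the w knob
    db = (loss(w, b + eps, xs, ys) - base) / eps  # effect of turning the b knob
    return dw, db


xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # hypothetical data where y = 2x
w, b = 1.0, 0.0                            # starting knob settings

dw, db = nudge_gradient(w, b, xs, ys)
print(round(dw, 3), round(db, 3))  # -9.333 -4.0

lr = 0.05                        # step size (an assumed value)
w, b = w - lr * dw, b - lr * db  # step against the gradient, i.e. downhill
print(loss(w, b, xs, ys) < loss(1.0, 0.0, xs, ys))  # True
```

Both numbers are negative, which says "turn each knob up to shrink the error", and one small step against the gradient does lower the loss.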

The gradient is just a set of directions that tells your model which way to walk to reduce its mistakes.

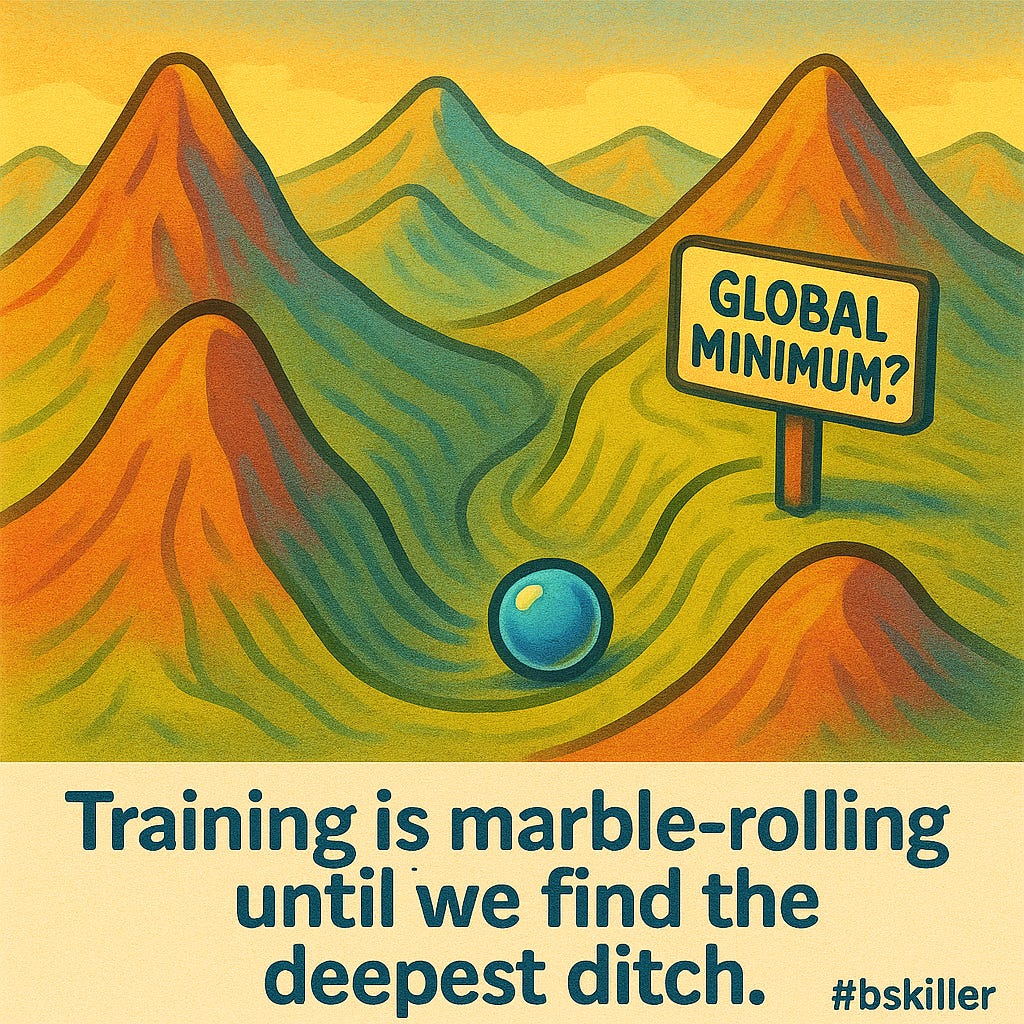

6. Loss Landscape: The Big Picture

"Training is marble-rolling until we find the deepest ditch."

Zoom out further. Your model has thousands or millions of parameters, each creating a dimension in a vast landscape of possible configurations.

The loss function creates mountains and valleys across this landscape. Training your model is like placing a marble at a random spot and letting it roll downhill.

Sometimes it finds the global minimum (the lowest possible error). Sometimes it gets stuck in a local minimum (a ditch that's not the deepest possible one).

This is why initialization and learning rates matter – they determine where your marble starts and how far it moves with each step.
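Here is the marble in code: plain gradient descent on a made-up one-dimensional landscape that has a deep valley near w = -1.30 and a shallower one near w = 1.13. The landscape, the starting points, and the learning rates are all assumptions chosen to make the effect visible.

```python
def landscape(w: float) -> float:
    """A made-up 1-D loss with two valleys: deep near w = -1.30, shallow near w = 1.13."""
    return w**4 - 3 * w**2 + w


def slope(w: float) -> float:
    """Derivative of landscape(w): which way is uphill, and how steep."""
    return 4 * w**3 - 6 * w + 1


def roll(start: float, lr: float, steps: int = 1000) -> float:
    """Gradient descent: from `start`, repeatedly step downhill by lr * slope."""
    w = start
    for _ in range(steps):
        w -= lr * slope(w)
        if abs(w) > 1e6:  # the marble has flown off the map; stop early
            break
    return w


print(round(roll(start=-2.0, lr=0.01), 2))  # -1.3  (settles in the deep valley)
print(round(roll(start=2.0, lr=0.01), 2))   # 1.13  (stuck in the shallow valley)
print(abs(roll(start=2.0, lr=0.2)) > 1e6)   # True  (steps too big: it diverges)
```

Same landscape, same rolling rule: the starting point alone decides which valley the marble settles in, and a learning rate that is too large makes it overshoot further on every step until it flies off the map.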

7. Generalization Check: The Final Test

"Low training loss? Great—but does it stick when the board moves?"

The final doodle shows two dartboards: "Training" and "Reality."

On the training board, your model's darts land in a tight cluster near the bullseye. Congratulations – low training loss!

But the real test is the second dartboard: when you move to new, unseen data, do your darts still cluster near the bullseye? Or do they scatter widely?

This reveals the true aim of machine learning: not just to memorize the training data, but to generalize patterns that work on new examples.
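A toy Python sketch of the two-dartboard check: score two hypothetical models on training data and on unseen data. The data (roughly y = 2x, with some noise) and both models are made up for illustration. One memorizes the training answers; the other is assumed to have already learned the underlying pattern.

```python
def mse(preds: list[float], truths: list[float]) -> float:
    """Mean squared error between predictions and true values."""
    return sum((p - t) ** 2 for p, t in zip(preds, truths)) / len(truths)


# Hypothetical data that roughly follows y = 2x, with a little noise.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [0.5, 1.5, 4.5, 5.5]
test_x, test_y = [4.0, 5.0], [8.5, 9.5]  # unseen inputs: "the board moves"

# Model A memorizes: the stored answer for seen inputs, a blind guess otherwise.
lookup = dict(zip(train_x, train_y))
fallback = sum(train_y) / len(train_y)


def memorizer(x: float) -> float:
    return lookup.get(x, fallback)


# Model B is assumed to have learned the underlying pattern y = 2x.
def pattern(x: float) -> float:
    return 2.0 * x


for name, model in [("memorizer", memorizer), ("pattern", pattern)]:
    train_loss = mse([model(x) for x in train_x], train_y)
    test_loss = mse([model(x) for x in test_x], test_y)
    print(f"{name}: train={train_loss:.2f} test={test_loss:.2f}")
# memorizer: train=0.00 test=36.25
# pattern: train=0.25 test=0.25
```

The memorizer wins on the training board and loses badly on the new one, which is exactly the gap a held-out test set is meant to catch.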

Why This Matters

These seven simple pictures contain the core ideas behind every neural network, recommendation system, and AI model on the planet.

The engineer who truly understands these concepts can:

Debug models when they fail

Choose appropriate loss functions for different problems

Avoid common training pitfalls

Explain complex AI systems to stakeholders

Most importantly, they can cut through the dense mathematical fog that surrounds machine learning and focus on the ideas that actually matter.

Now What?

Next time someone tries to intimidate you with complex loss function equations, remember these doodles.

The core ideas behind machine learning aren't actually that complicated – they've just been buried under layers of notation and jargon that make simple concepts seem impenetrable.

What other ML concepts would you like to see broken down into simple visuals? Let me know in the comments.

This is the first in my "ML Explained Through Doodles" series. Subscribe for visual explanations of backpropagation, attention mechanisms, transformers, and more – using nothing more than simple black and white sketches that actually make sense.
